The AI world just got a major shake-up, and it’s all thanks to Pruna AI. On March 20, 2025, this European startup made headlines by open-sourcing its AI model optimization framework, a move being hailed as a stunning win for efficiency. With open-source AI gaining traction, Pruna AI’s decision to share its tech for free is a game-changer for developers, businesses, and anyone looking to make AI faster, smaller, and greener. Let’s unpack what this means, how it works, and why it’s a big deal in 2025.

What’s the Buzz About Pruna AI?

Pruna AI isn’t a household name yet, but it’s quickly earning a reputation as a leader in AI efficiency. Founded in 2023 by a team of researchers that includes Bertrand Charpentier and John Rachwan, this Paris- and Munich-based company has been quietly building tools to tackle a common AI headache: bloated, resource-hungry models. Their solution? An optimization framework that shrinks models with minimal loss in quality. With their open-source release on March 20, 2025, Pruna AI is letting everyone in on the action.

Reported by TechCrunch and celebrated on platforms like X, the framework uses techniques like pruning, quantization, and caching to make AI models leaner and meaner. The best part? It’s free for anyone to download, tweak, and use, whether you’re a solo coder or a big corporation. This open-source move isn’t just about sharing code; it’s about setting a new standard for running AI in a world where speed and sustainability matter more than ever.

How Pruna AI’s Framework Works

So, what’s under the hood of this open-source gem? Pruna AI’s framework is like a toolbox for making AI models more efficient. It takes complex models, such as large language models (LLMs) or image generators, and trims the fat. Here’s how it does it:

1. Pruning the Excess

Think of pruning like trimming a bush. The framework removes parts of a model that don’t pull their weight while keeping core performance largely intact. This makes models smaller and faster, well suited to running on everyday hardware instead of pricey supercomputers.
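
To make the idea concrete, here is a minimal sketch of unstructured magnitude pruning, one common pruning strategy: the weights with the smallest absolute values are set to zero. A plain Python list stands in for a layer’s weights. The function name `magnitude_prune` and the example values are illustrative assumptions, not Pruna’s API or necessarily the method Pruna uses.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)  # copy so the input is left untouched
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


weights = [0.8, -0.05, 0.3, 0.01, -0.9, 0.02]
print(magnitude_prune(weights, 0.5))  # [0.8, 0.0, 0.3, 0.0, -0.9, 0.0]
```

Zeroed weights only save memory and time when they are stored in a sparse format or removed structurally (for example, whole neurons or attention heads), which is why practical pruning tools often work on structured blocks rather than individual values.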

2. Quantization for Speed

Quantization sounds fancy, but it’s simple: it lowers the precision of the numbers a model stores, for example from 32-bit floating point to 8-bit integers. Less precision means less memory and quicker calculations. Pruna AI’s framework tunes this process to keep accuracy loss small while gaining speed, a key perk of this open-source tool.
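
Here is a minimal sketch of symmetric 8-bit quantization, assuming a single scale factor for the whole tensor. Each float is divided by the scale and rounded to an integer in [-127, 127]. The integer is multiplied back by the scale when the value is needed. The helper names are hypothetical, and the code is a generic illustration rather than Pruna’s implementation.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; guard against an all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the stored integers."""
    return [x * scale for x in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 100]
restored = dequantize(q, scale)  # close to the originals; 0.003 collapses to 0.0
```

Each stored value now fits in one byte instead of the four used by a 32-bit float, and the rounding error per weight is at most half the scale. Production quantizers usually refine this with per-channel or per-group scales to keep accuracy loss small.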

3. Caching Smarts

Have you noticed how your phone loads apps faster the second time? That’s caching. Pruna AI uses it to store and reuse results that would otherwise be recomputed, cutting down on repeat work. This boosts efficiency, especially for models that generate many outputs, like chatbots or video generators.

4. Easy Mixing and Matching

Unlike tools that focus on a single method, Pruna AI combines these techniques, and more, into one user-friendly package. Developers can tweak settings by hand or let Pruna’s upcoming “compression agent” pick the best combination automatically. It’s open-source flexibility at its finest.

The result? According to Pruna, models can become up to 10 times smaller and faster with minimal quality loss. For example, the company says it shrank a Llama model eightfold with little loss in quality, saving compute power and cash.
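
For intuition about figures like “eightfold,” the memory needed for a model’s weights is roughly parameters × bits per weight ÷ 8 bytes. The sketch below assumes a hypothetical 8-billion-parameter model. It ignores activations, caches, and quantization metadata. The article does not say which techniques produced Pruna’s eightfold result.

```python
def weight_memory_gb(num_params: int, bits_per_weight: float) -> float:
    """Approximate storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


params = 8_000_000_000  # hypothetical 8B-parameter model
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {weight_memory_gb(params, bits):5.1f} GB")
# prints 32.0, 16.0, 8.0 and 4.0 GB for the four bit widths
```

Going from 32-bit to 4-bit weights alone accounts for an 8x reduction. Starting from the 16-bit formats in which many models are distributed, the same step gives only 4x, so a larger overall reduction would need other methods on top.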

Why Open Source Matters in 2025

The open-source AI trend isn’t new (think Meta’s Llama or IBM’s Granite models), but Pruna AI’s release stands out. Why? Because it’s not just about sharing; it’s about solving real problems. AI models are getting bigger, and running them is getting pricier. Companies like OpenAI use techniques such as distillation to keep costs down, but those methods stay in-house. Pruna AI flips the script by releasing its framework for free, leveling the playing field for everyone.

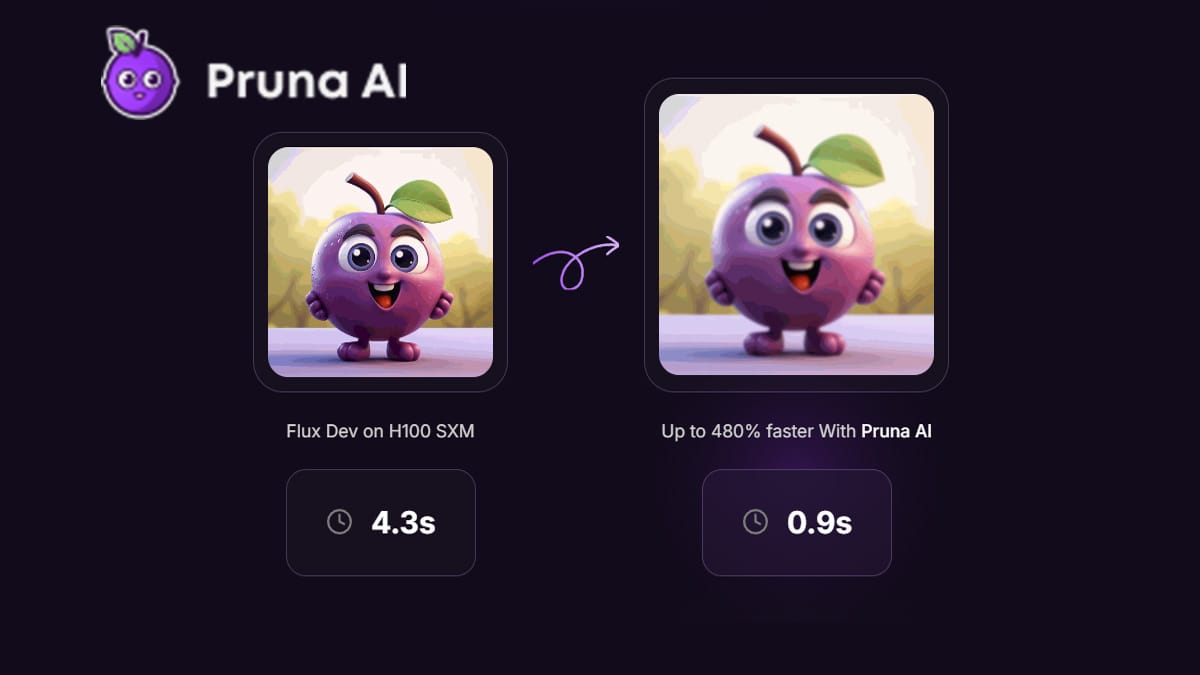

This open-source release comes at a perfect time. Posts on X from March 19, 2025, show developers buzzing about efficiency gains, with claims of 70% faster response times and half the usual lag. With climate concerns rising, Pruna’s green angle (cutting energy use by up to 91%, according to the company) adds extra appeal. It’s not just tech; it’s a movement.

Who’s Jumping on Board?

Pruna AI’s open-source framework is already making waves. Companies like Scenario and PhotoRoom, both big in image and video generation, are early customers, using it to streamline their workflows. Midsize firms with custom AI setups are also signing on, drawn by the promise of lower computing bills. Even solo developers are digging in, with activity on Pruna’s GitHub repo picking up since the March 20 release.

The community vibe is strong, too. Pruna’s team, which boasts over 300 research papers, built this for real-world use, not just headlines. Its docs and tutorials make it easy to get started, whether you’re optimizing a chatbot or a vision model. It’s open-source AI with a human touch.

Pruna AI vs the Competition

How does Pruna stack up in the open-source AI arena? Let’s compare:

- Hugging Face: A giant in model hosting, Hugging Face standardizes how models are shared. Pruna AI takes it further by optimizing those models for speed and size—think of it as Hugging Face’s efficiency sidekick.

- vLLM: This open-source serving engine speeds up language-model inference, but its scope is narrower than Pruna’s all-in-one approach, which handles everything from LLMs to diffusion models.

- Proprietary Tools: Big players like OpenAI offer slick solutions but are costly and closed off. Pruna’s free, open framework gives you control without the lock-in.

Pruna AI’s edge? It’s a one-stop shop for efficiency, blending multiple methods into a powerful and free package.

Real-World Wins

The proof is in the numbers. Pruna AI’s framework has already delivered jaw-dropping results. Pruna reports that a Llama model went from bulky to bite-sized, eight times smaller, while keeping most of its smarts. For image models like Stable Diffusion, combining caching and quantization cut latency and memory use, making them viable on modest GPUs. That’s a stunning win for developers who can’t afford top-tier hardware.

Businesses love it, too. Because the framework cuts inference costs (the price of running AI), the savings show up directly on the compute bill. A TechCrunch report from March 20, 2025, points to midsize firms saving big while startups get a leg up without investor-sized budgets.

Benefits for Everyday Users

You don’t need to be a tech guru to see the upside of this open-source release:

- Cost Cuts: Smaller models mean less spending on cloud servers or GPUs.

- Speed Jumps: Faster inference keeps apps and services snappy.

- Green Gains: Less energy use is a win for the planet.

- SEO Boost: For content creators, optimized AI means quicker, fresher output to climb search rankings.

Imagine a blogger churning out posts twice as fast or a small shop personalizing ads on a dime. That’s Pruna AI in action.

Challenges to Consider

It’s not all smooth sailing. Pruna’s open-source framework requires some technical know-how, such as Python skills and a bit of patience for setup. It shines brightest with custom models, so those using off-the-shelf models may need extra tweaking. And while the core framework is free, the Pro version (with perks like the compression agent) is billed by the hour, which could add up for heavy users.

Still, the open-source community and Pruna’s support—like Discord chats and detailed guides—help ease the bumps.

What’s Next for Pruna AI?

Pruna AI isn’t resting on this win. With $6.5 million from a seed round last fall (led by EQT Ventures), they’re hiring more brains to grow the team. The compression agent, set to launch soon, will let users say, “Make it fast, keep it accurate,” and watch the magic happen. Future updates might extend support to more model types or hardware, cementing Pruna’s lead in open-source AI efficiency in 2025.

Final Thoughts

Pruna AI’s open-source release on March 20, 2025, is a stunning victory for efficiency. By sharing its optimization framework, Pruna is handing developers and businesses a free, powerful tool for running AI without the usual costs or complexity. It’s fast, green, and open to all, which is everything 2025’s AI scene needs. Whether you’re coding for fun or running a company, this is your chance to jump in and ride the wave. Pruna AI just proved that big wins don’t need big price tags.