Explainable AI (XAI): Building Trust and Transparency in 2024

The year is 2024. AI permeates our lives, influencing everything from loan approvals to self-driving cars. But as AI’s reach grows, a crucial question echoes: can we trust these black boxes? Enter Explainable AI (XAI), a burgeoning field dedicated to demystifying AI’s decisions, building trust, and ensuring responsible AI development.

Why XAI Matters Now More Than Ever:

Imagine an AI denying you a loan for seemingly unknowable reasons. Or a self-driving car veering off course, leaving you bewildered and questioning its actions. These scenarios highlight the dangers of opacity in AI. Without XAI, we’re left in the dark, vulnerable to biases, errors, and potential manipulation.

XAI seeks to illuminate these black boxes by explaining AI’s choices. The goal goes beyond understanding: it’s about building trust, mitigating bias, and ensuring ethical AI development.

Demystifying the Black Box: Approaches to XAI:

XAI isn’t a singular tool; it’s a toolbox brimming with approaches to explainability. Here are a few key players:

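- Model-agnostic explanations (e.g., LIME): These methods can explain any AI model, regardless of its internal workings, because they only observe its inputs and outputs. LIME (Local Interpretable Model-agnostic Explanations), for example, slightly perturbs the input around a single prediction and fits a simple, interpretable model that mimics the black box in that neighborhood. Imagine probing a sealed box from the outside, nudging its dials and watching how the output responds, to understand the factors behind one AI decision.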

- Feature importance: This approach highlights the features that contribute most to an AI’s output. Think of it as identifying the key ingredients in a recipe that create the final dish.

- Counterfactual explanations: These methods ask “what if?” questions, identifying the smallest change to the input that would have altered the AI’s decision (for example, “the loan would have been approved if your income were higher”). Imagine rewinding time and exploring different choices to understand the reasoning behind the original outcome.
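To make the model-agnostic and counterfactual ideas concrete, here is a minimal, dependency-free Python sketch. Everything in it is a hypothetical assumption for illustration: the `black_box` loan formula, the two features (income in thousands of dollars and debt-to-income ratio), and all helper names. `local_surrogate` follows the LIME recipe in simplified form (perturb around one applicant, weight samples by proximity, fit a weighted linear model); it is not the API of the `lime` library. `counterfactual_income` is a brute-force search along a single feature, a simplification of counterfactual methods that search over all features for the smallest change.

```python
import math
import random


def black_box(income, debt_ratio):
    """Hypothetical loan model we can query but not inspect (illustrative formula)."""
    z = 0.08 * income - 6.0 * debt_ratio - 2.0
    return 1.0 / (1.0 + math.exp(-z))  # approval probability in (0, 1)


def solve(a, b):
    """Solve a @ x = b with Gauss-Jordan elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def local_surrogate(model, x, scales, n_samples=500, kernel_width=1.0, seed=0):
    """LIME-style sketch: sample around x, weight by proximity, fit a weighted linear model.

    Returns [intercept, slope_0, slope_1, ...] of the local linear approximation.
    """
    rng = random.Random(seed)
    rows, ys, ws = [], [], []
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, s) for xi, s in zip(x, scales)]  # perturbed input
        d2 = sum(((zi - xi) / s) ** 2 for zi, xi, s in zip(z, x, scales))
        rows.append([1.0] + z)  # leading 1.0 gives the surrogate an intercept
        ys.append(model(*z))
        ws.append(math.exp(-d2 / kernel_width ** 2))  # closer samples count more
    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y.
    k = len(rows[0])
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, ws)) for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, ws, ys)) for i in range(k)]
    return solve(xtwx, xtwy)


def counterfactual_income(model, x, threshold=0.5, step=1.0, max_income=200.0):
    """Smallest income (in `step` increments) that lifts the score to `threshold`.

    Holds debt_ratio fixed; returns None if nothing up to max_income works.
    """
    income, debt_ratio = x
    k = 0
    while income + k * step <= max_income:
        candidate = income + k * step
        if model(candidate, debt_ratio) >= threshold:
            return candidate
        k += 1
    return None


applicant = [40.0, 0.3]  # hypothetical: income in $1,000s, debt-to-income ratio
print("approval probability:", round(black_box(*applicant), 3))
coef = local_surrogate(black_box, applicant, scales=[5.0, 0.05])
print(f"local effect of income: {coef[1]:+.4f}, of debt ratio: {coef[2]:+.4f}")
print("approved if income were:", counterfactual_income(black_box, applicant))
```

For this toy applicant, the surrogate reports a positive local slope for income and a negative one for debt ratio, and the counterfactual search finds that an income of 48 (that is, $48,000) would lift the approval probability past 0.5. Real tools add feature selection, categorical handling, and plausibility constraints that this sketch omits.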

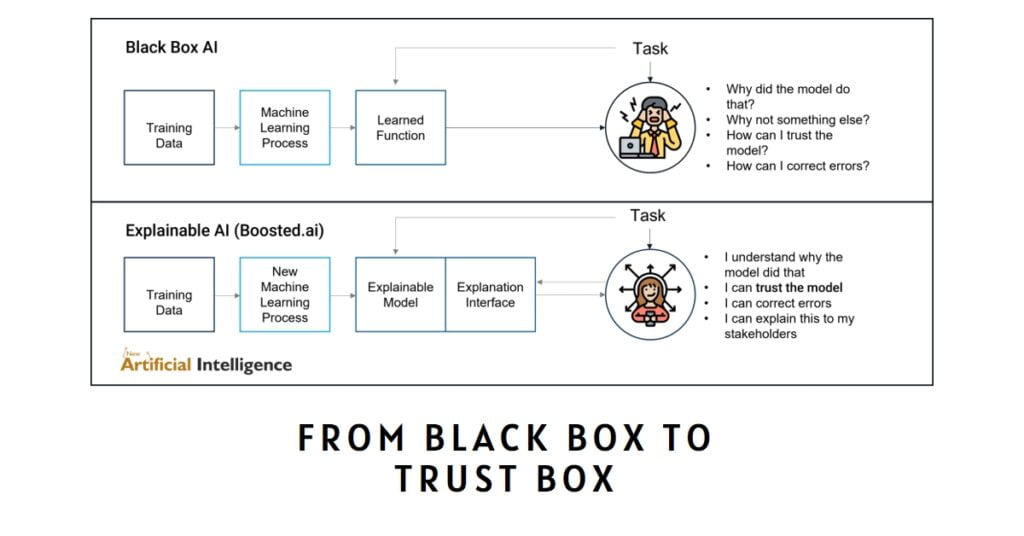

Case Study: XAI in Action – From Black Box to Trust Box:

Imagine a healthcare AI recommending surgery. Traditionally, a patient is left to choose between blind acceptance and skepticism. With XAI, the AI can explain which patient data points influenced its recommendation and present alternative treatment options if the recommended surgery carries high risks. This transparency empowers patients to make informed decisions and builds trust in the AI’s judgment.
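For a model whose score is a weighted sum of inputs, “which data points influenced the recommendation” has an exact answer: each feature’s weight times how far the patient sits from a reference patient. The sketch below assumes a hypothetical linear risk score; the feature names, weights, and baseline values are made up for illustration and carry no clinical meaning. When the baseline is the average patient and features are treated as independent, these contributions coincide with SHAP values for a linear model.

```python
# Hypothetical linear surgery-risk score. Feature names, weights, and baselines
# are illustrative assumptions, not clinical values.
WEIGHTS = {"age": 0.03, "lesion_size_mm": 0.12, "prior_surgeries": 0.40}
BASELINE = {"age": 55.0, "lesion_size_mm": 10.0, "prior_surgeries": 1.0}  # a "typical" patient
INTERCEPT = -1.0


def risk_score(patient):
    """Higher score = stronger case for surgery in this toy model."""
    return INTERCEPT + sum(WEIGHTS[f] * patient[f] for f in WEIGHTS)


def contributions(patient):
    """How far each feature moves the score away from the baseline patient.

    For a linear model these contributions add up exactly to
    risk_score(patient) - risk_score(BASELINE).
    """
    return {f: WEIGHTS[f] * (patient[f] - BASELINE[f]) for f in WEIGHTS}


patient = {"age": 62.0, "lesion_size_mm": 25.0, "prior_surgeries": 0.0}
for name, value in sorted(contributions(patient).items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>16}: {value:+.2f}")
```

Here the lesion size pushes the score up the most, while having no prior surgeries pulls it down slightly; the contributions sum to the difference between this patient’s score and the baseline score.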

XAI Challenges and the Road Ahead:

While XAI holds immense promise, challenges remain. Explainability techniques can be complex and computationally expensive, and they are sometimes limited in their ability to explain the most sophisticated AI models. Furthermore, defining a “good” explanation is subjective and context-dependent.

Despite these hurdles, the XAI field is rapidly evolving. Researchers are developing new methodologies, and user-friendly tools are becoming accessible. Additionally, ethical guidelines and regulations are emerging to ensure responsible AI development, where explainability is a cornerstone.

Building a Future with XAI:

As we embrace the potential of AI, XAI stands as a critical safeguard. It fosters trust, mitigates bias, and empowers humans to understand and navigate the increasingly complex world of AI. Here’s how you can champion XAI:

- Demand transparency in AI-powered systems you interact with. Ask questions, seek explanations, and encourage organizations to prioritize XAI.

- Support research and development in XAI technologies. Engage with the growing XAI community and contribute to advancing this crucial field.

- Advocate for ethical AI development that prioritizes explainability and accountability. Let your voice be heard and help shape a future where AI serves humanity transparently and responsibly.

Beyond the Explanations: XAI’s Broader Impact

While XAI primarily focuses on demystifying individual AI decisions, its impact extends beyond mere explanations. Here are some broader ramifications of a world that embraces XAI:

- Building a Just and Equitable Society: XAI can help identify and mitigate biases embedded in AI algorithms, promoting fairness in areas like loan approvals, hiring decisions, and criminal justice. By uncovering biases, stakeholders can take steps to address them and ensure AI serves everyone equally.

- Fostering Human-AI Collaboration: When we understand how AI models work, we can better collaborate with them, leveraging their strengths while compensating for their limitations. This collaborative approach opens doors to solving complex challenges in healthcare, climate change, and scientific research.

- Empowering Responsible AI Development: XAI can help hold developers and corporations accountable for the decisions of their AI models. Transparency encourages responsible development practices that weigh ethical considerations alongside technological advancements.

- Cultivating Informed Public Discourse: As XAI demystifies AI, public discussions about its role in society can become more informed and nuanced. Citizens empowered with understanding can actively shape how AI is developed and deployed, ensuring it aligns with societal values and priorities.
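Uncovering bias usually starts with a measurable gap. The sketch below is a group-level fairness check rather than an explanation method in itself: it compares approval rates between two hypothetical applicant groups and computes the ratio of the lowest rate to the highest. The 0.8 cutoff reflects the “four-fifths” rule of thumb from US employment guidance; the decisions and group labels are invented for illustration. A flagged gap is where feature-level explanations come in, to find which inputs drive it.

```python
def selection_rates(decisions, groups):
    """Share of positive decisions (1 = approved) within each group."""
    totals, approved = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + decision
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(decisions, groups)
    return min(rates.values()) / max(rates.values())


# Hypothetical loan decisions for two applicant groups, A and B.
decisions = [1, 1, 1, 0, 1, 1, 0, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5
ratio = disparate_impact_ratio(decisions, groups)
print(selection_rates(decisions, groups), round(ratio, 2))
if ratio < 0.8:  # "four-fifths" rule of thumb
    print("Flag for review: approval rates differ substantially across groups.")
```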

Case Study: XAI in Action – Bridging the Gap Between Humans and Machines:

Imagine a team of researchers using XAI to understand how an AI model predicts crop yields. By analyzing the model’s explanations, they discover it’s heavily influenced by historical data, potentially overlooking climate change factors. This insight allows them to refine the model, leading to more accurate predictions and informed agricultural strategies.
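The crop-yield scenario can be reproduced in miniature with permutation feature importance, one common way to measure how much a model relies on each input: shuffle one feature column, breaking its link to the target, and see how much the error grows. The data, the simulated “true” process, and the `model` below are all hypothetical assumptions: the model leans on last year’s yield and ignores a heat-stress feature that does matter in the simulated data.

```python
import random


def mse(model, rows, targets):
    """Mean squared error of `model` on the given rows."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_repeats=10, seed=0):
    """Average increase in MSE when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    base = mse(model, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the target
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mse(model, shuffled, targets) - base)
        importances.append(sum(increases) / n_repeats)
    return importances


# Hypothetical field data: [last_year_yield (t/ha), rainfall (mm), heat_days].
data_rng = random.Random(42)
rows, targets = [], []
for _ in range(200):
    row = [data_rng.uniform(2.0, 8.0), data_rng.uniform(300.0, 700.0), data_rng.uniform(0.0, 30.0)]
    rows.append(row)
    # Simulated "true" yield, in which heat stress lowers the harvest.
    targets.append(0.8 * row[0] + 0.002 * row[1] - 0.05 * row[2] + data_rng.gauss(0.0, 0.2))


def model(r):
    """Hypothetical trained model: leans on last year's yield, ignores heat_days."""
    return 0.9 * r[0] + 0.002 * r[1]


names = ["last_year_yield", "rainfall", "heat_days"]
for name, score in zip(names, permutation_importance(model, rows, targets)):
    print(f"{name:>16}: {score:.3f}")
```

Because the model never reads `heat_days`, shuffling that column leaves its error unchanged and its importance is exactly zero, while `last_year_yield` dominates. That is the kind of signal that would prompt researchers to revisit the model.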

The Future of XAI: Collaboration and Innovation:

XAI is in its infancy, but its potential is undeniable. As the field evolves, we can expect advancements in:

- Interpretable AI models: Designing AI models that are inherently explainable, reducing the need for post-hoc explanation techniques.

- Interactive visualization tools: Developing user-friendly interfaces that allow anyone to explore and understand AI explanations, regardless of technical expertise.

- Standardized XAI guidelines: Establishing best practices and ethical frameworks for explainable AI development and deployment.
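An inherently interpretable model needs no separate explainer, because the model is the explanation. One of the smallest examples is a one-rule classifier (a decision stump, i.e., a depth-one decision tree). The sketch assumes a tiny, made-up set of loan applicants; `fit_stump` is a hypothetical helper, not a library function.

```python
def fit_stump(rows, labels, feature_names):
    """Fit a one-rule classifier: predict `below` if feature <= threshold, else the other label.

    Tries every feature and every observed value as a threshold, keeping the
    rule with the fewest training errors (the first one found wins ties).
    """
    best = None
    for j, name in enumerate(feature_names):
        for threshold in sorted({r[j] for r in rows}):
            for below in (0, 1):
                preds = [below if r[j] <= threshold else 1 - below for r in rows]
                errors = sum(p != y for p, y in zip(preds, labels))
                if best is None or errors < best["errors"]:
                    best = {"feature": name, "threshold": threshold, "below": below, "errors": errors}
    return best


# Hypothetical applicants: [income in $1,000s, debt-to-income ratio]; 1 = approved.
rows = [[35, 0.10], [60, 0.15], [28, 0.22], [50, 0.28], [70, 0.35], [45, 0.41], [30, 0.48], [55, 0.55]]
labels = [1, 1, 1, 1, 0, 0, 0, 0]
rule = fit_stump(rows, labels, ["income", "debt_ratio"])
outcome = "approve" if rule["below"] == 1 else "deny"
other = "deny" if rule["below"] == 1 else "approve"
print(f'IF {rule["feature"]} <= {rule["threshold"]} THEN {outcome} ELSE {other} ({rule["errors"]} training errors)')
```

On this data the learned rule reads `IF debt_ratio <= 0.28 THEN approve ELSE deny`, which anyone can audit directly. Deeper trees, rule lists, and sparse linear models trade some of this simplicity for accuracy.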

Together, We Can Unlock the Potential of XAI:

Building a future powered by explainable AI demands a collective effort. Researchers, developers, policymakers, and individuals must collaborate to overcome technical challenges, promote responsible development, and ensure public trust.

Embrace the challenge, champion transparency, and advocate for XAI. Through our collaborative efforts, we can unlock the vast potential of AI while protecting the values that define humankind. Let’s paint a future where AI empowers, informs, and guides us, all bathed in the illuminating light of Explainable AI.

Remember, the future of AI is in our hands. Let’s shape it with trust, transparency, and XAI as our guiding lights.

FAQs:

Is XAI just for technical experts?

Not at all! While the technical underpinnings of XAI can be complex, the need for understanding AI decisions is universal. XAI tools and explanations are becoming increasingly user-friendly, allowing anyone to explore and grasp the reasoning behind AI models. So, whether you’re a data scientist or a curious citizen, XAI welcomes you with open arms (and explanations!).

What are the limitations of XAI?

Like any emerging field, XAI faces challenges. Explaining complex AI models can be computationally expensive, and even the most sophisticated techniques sometimes cannot fully unravel the reasoning behind certain decisions. However, research is advancing rapidly, and the field continues to evolve to overcome these limitations. Remember, XAI is a journey, not a destination, and progress is made daily.

How can I get involved in XAI?

There are plenty of ways to contribute to the XAI revolution! Stay informed by following XAI research blogs and communities, participating in discussions about ethical AI development, and advocating for transparency in AI-powered systems you encounter. You can even get your hands dirty by learning about user-friendly XAI tools and exploring explanations for yourself. Every action, big or small, contributes to building a future where AI is explainable, accountable, and beneficial to all.
